Llama 2 (13B) vs Grok: A Comprehensive Comparison

In artificial intelligence, a model's architecture and capabilities largely determine its performance and usability. This comparison looks at two prominent AI models: Llama 2 (13B) and Grok. By examining their features, performance, user experience, and pricing, we aim to give software engineers and AI enthusiasts enough understanding to make informed decisions.

Understanding the Basics: Llama 2 (13B) and Grok

What is Llama 2 (13B)?

Llama 2 (13B) is the mid-sized model in the Llama 2 family that Meta released in July 2023, alongside 7B and 70B variants. It is designed to process and generate human-like text fluently. With 13 billion parameters, it strikes a balance between efficiency and capability, allowing it to handle a variety of natural language processing tasks effectively.
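
To make the 13-billion-parameter figure more concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy at common numeric precisions. The function name and the precision table are illustrative assumptions, and the estimate deliberately ignores activations, the attention cache, and framework overhead, so real deployments need more headroom.

```python
# Approximate storage cost per parameter for common precisions (bytes).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Estimate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # 13e9 parameters, as stated for Llama 2 (13B).
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: {weight_memory_gb(13e9, precision):.1f} GB")
```

Under these assumptions, half-precision weights need about 26 GB, which is why quantized variants are often considered when the model has to fit on a single consumer GPU.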

Llama 2 uses a decoder-only transformer architecture, which supports contextual understanding and coherence in generated text. The model can be employed in numerous applications, from chatbots and content creation to more complex reasoning tasks. Its handling of nuance in language makes it a candidate for applications requiring a high degree of precision, such as legal document analysis or medical report generation. Additionally, Meta tuned the chat variants with human feedback to reduce biased and unsafe outputs, a crucial property for ethical AI deployment across diverse industries.

What is Grok?

Grok, developed by xAI, is a model built around reasoning and conversational ability. It emphasizes interactive dialogue and understanding the intent behind user queries, and it refines its responses based on context and user interaction.

With a focus on adaptability, Grok can be trained further on specialized datasets, making it an attractive option for businesses looking to improve their customer interactions. Its core design supports real-time learning and adjustment, which makes it a competitive option in the AI landscape. Moreover, Grok's ability to analyze sentiment and emotional tone lets it engage users in a more meaningful way. This capability is particularly beneficial in sectors like customer service and mental health support, where empathetic communication is essential. As it evolves, Grok is expected to incorporate more sophisticated features, such as multi-modal interaction, which would enable it to process not just text but also voice and visual inputs, broadening its applicability across platforms and devices.

Key Features of Llama 2 (13B) and Grok

Unique Features of Llama 2 (13B)

One of the defining characteristics of Llama 2 (13B) is an architecture that performs well across varied contexts. Meta's fine-tuning with human feedback is intended to reduce biased outputs and support ethical use. This matters in today's landscape, where concerns about AI accountability and transparency are paramount.

- Robust Contextual Understanding: Supports a 4,096-token context window, double the 2,048 tokens of the original Llama models.

- High Efficiency: Executes computations quickly, enabling faster response times in applications.

- Diverse Use Cases: Supports various applications, from chatbots to coding assistants, making it adaptable for different domains.

Moreover, Llama 2 (13B) is built to generate coherent and contextually relevant responses. This comes from pretraining on roughly 2 trillion tokens of publicly available text followed by fine-tuning, which both strengthens its linguistic capabilities and allows it to hold more meaningful conversations. As a result, users can expect a more intuitive interaction, whether they're seeking information, assistance, or simply engaging in casual dialogue.

Unique Features of Grok

Grok stands out for its emphasis on conversational flow and user engagement. Its training involves extensive dialogue datasets, which give it the ability to interpret nuances in user input effectively. Some notable features include:

- Real-Time Learning: Adapts its responses based on user interactions, enhancing the overall experience.

- Intent Recognition: Offers advanced mechanisms to understand user motivations beyond simple queries.

- Customizability: Businesses can fine-tune the model according to specific needs, ensuring relevance in customer interactions.

In addition to these features, Grok's architecture is designed to foster a more human-like interaction, making it particularly effective in customer service applications. By leveraging sentiment analysis, Grok can gauge the emotional tone of user inputs, allowing it to respond with empathy and understanding. This capability not only improves user satisfaction but also builds trust in AI interactions, which is crucial in maintaining long-term customer relationships. Furthermore, Grok's ability to integrate with existing platforms makes it a versatile choice for businesses looking to enhance their digital communication strategies.

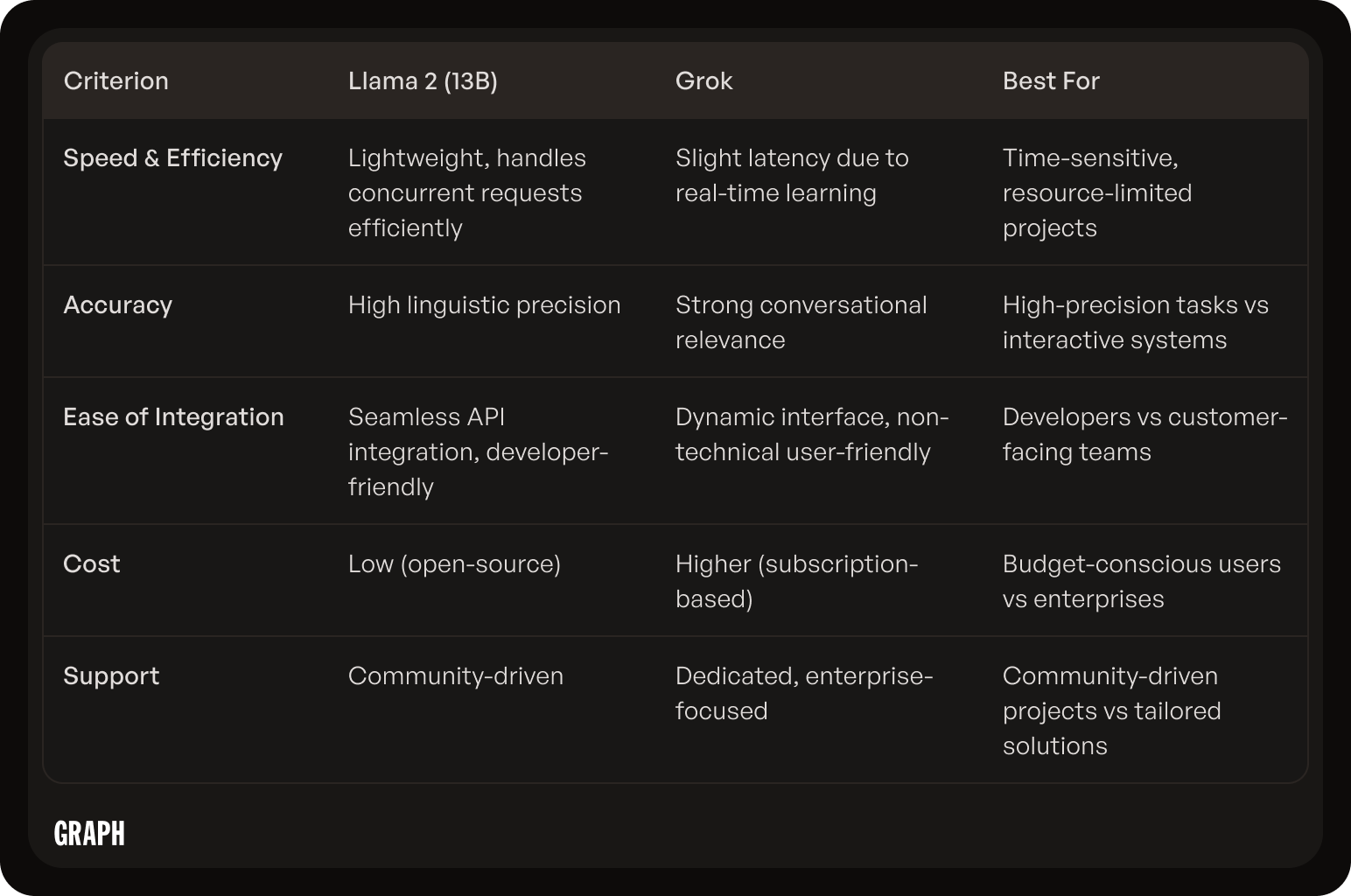

Performance Analysis: Llama 2 (13B) vs Grok

Speed and Efficiency

When examining performance, speed and efficiency are critical metrics. Llama 2 (13B) is relatively lightweight for a large language model, which makes it suitable for deployment across various platforms. Given adequate hardware, its ability to handle concurrent requests without significant delay is a key advantage for businesses requiring rapid response times.

Conversely, Grok, while slightly heavier in terms of processing requirements due to its adaptive learning processes, still provides commendable response speeds. Its ability to learn in real-time, however, can introduce variability in latency, especially when dealing with diverse user queries. This dynamic learning capability allows Grok to refine its responses based on user interactions, which can be particularly beneficial in environments where user preferences evolve rapidly, such as customer support or interactive applications.
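
Claims about response speed and latency variability are easiest to evaluate by measuring them on your own workload. The following is a minimal sketch of such a measurement, not a benchmark of either model: `generate` is a hypothetical stand-in for whatever function calls the model you are testing, and the nearest-rank 95th percentile is one of several reasonable ways to summarize tail latency.

```python
import statistics
import time
from typing import Callable, Dict, List, Sequence


def summarize_latencies(samples: Sequence[float]) -> Dict[str, float]:
    """Summarize latency samples (seconds) with mean, median, spread, and p95."""
    ordered: List[float] = sorted(samples)
    n = len(ordered)
    # Nearest-rank percentile: rank = ceil(0.95 * n), computed with integers.
    p95_rank = (95 * n + 99) // 100
    return {
        "count": n,
        "mean_s": statistics.mean(ordered),
        "median_s": statistics.median(ordered),
        "stdev_s": statistics.pstdev(ordered),
        "p95_s": ordered[p95_rank - 1],
    }


def measure_latency(generate: Callable[[str], str],
                    prompts: Sequence[str]) -> Dict[str, float]:
    """Time one call to `generate` per prompt and summarize the results."""
    samples = []
    for prompt in prompts:
        start = time.perf_counter()
        generate(prompt)  # hypothetical model call supplied by the caller
        samples.append(time.perf_counter() - start)
    return summarize_latencies(samples)
```

A large gap between `median_s` and `p95_s`, or a high `stdev_s`, is the kind of variability described above, and it is worth checking with prompts that resemble real user traffic.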

Accuracy and Precision

In the domain of accuracy, both models display strengths but cater to different user needs. Llama 2 (13B) excels in generating coherent and contextually relevant responses, making it a reliable choice for tasks that demand high levels of linguistic precision. This model's training on extensive datasets ensures that it can produce nuanced language, which is especially valuable in creative writing, academic research, and professional communication.

On the other hand, Grok’s sharp focus on conversational context allows it to perform exceptionally well in dialogue-based environments. Its ability to identify user intent means that while it may not always produce text that is as precisely articulated as Llama 2, it often hits the mark regarding user satisfaction and relevance. This adaptability can lead to a more engaging user experience, as Grok can adjust its tone and style based on the ongoing conversation, making it a strong contender for applications in social media management and personal virtual assistants. Additionally, Grok's continuous learning mechanism enables it to stay updated with current trends and language usage, further enhancing its relevance in fast-paced digital interactions.

User Experience: Llama 2 (13B) vs Grok

Interface and Usability

The user interface and overall usability can significantly impact the adoption of an AI model. Llama 2 (13B) offers a straightforward setup for developers, with APIs that integrate readily into existing software systems. The documentation is well-structured, providing clear examples and use cases that help developers implement the model effectively. This ease of use is particularly beneficial for teams with tight deadlines or limited resources, as it shortens the learning curve and accelerates deployment.
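
As one small illustration of what integration involves, the chat-tuned Llama 2 variants expect prompts wrapped in a specific template with `[INST]` and `<<SYS>>` markers. The helper below is a minimal sketch of the single-turn form of that template, assuming you are using a chat variant and that your tokenizer adds the beginning-of-sequence token itself. The function name is hypothetical and not part of any library.

```python
from typing import Optional


def build_llama2_chat_prompt(user_message: str,
                             system_prompt: Optional[str] = None) -> str:
    """Format a single-turn prompt in the Llama 2 chat template.

    The beginning-of-sequence token is intentionally omitted here on the
    assumption that the tokenizer adds it.
    """
    user_message = user_message.strip()
    if system_prompt:
        # The system prompt is embedded inside the first user instruction.
        return (
            f"[INST] <<SYS>>\n{system_prompt.strip()}\n<</SYS>>\n\n"
            f"{user_message} [/INST]"
        )
    return f"[INST] {user_message} [/INST]"


if __name__ == "__main__":
    print(build_llama2_chat_prompt(
        "Summarize this contract clause in one sentence.",
        system_prompt="You are a careful assistant.",
    ))
```

Many serving libraries apply this template for you, so a helper like this is mainly useful when calling a raw text-completion endpoint.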

In contrast, Grok provides a more dynamic interface designed for engagement. It allows users to interact with the AI in a more naturalistic way, making it particularly well-suited for customer service applications. The design prioritizes user experience, making it easier for non-technical users to leverage its capabilities without extensive training. Additionally, Grok incorporates interactive tutorials and real-time feedback mechanisms that enhance user confidence and competence. This focus on user engagement not only improves satisfaction but also encourages users to explore the full range of features available, fostering a deeper understanding of the AI's potential.

Customer Support and Service

Support services can be a decisive factor when choosing between AI models. Llama 2 (13B) benefits from the strength of Meta’s support network, offering extensive documentation, community forums, and professional support options. The community-driven aspect allows users to share insights, troubleshoot issues, and collaborate on best practices, creating a rich ecosystem of knowledge that can be invaluable for developers facing challenges during implementation.

Grok’s support model is tailored to ensure businesses can derive maximum value from the product, frequently providing dedicated account managers and comprehensive onboarding resources. This personalized approach can help users navigate the complexities of AI integration and implementation. Furthermore, Grok often conducts regular check-ins and feedback sessions to assess user satisfaction and gather insights for continuous improvement. This proactive support strategy not only addresses immediate concerns but also fosters a long-term partnership that can adapt to evolving business needs and technological advancements. By investing in user success, Grok positions itself as a reliable ally in AI adoption, ensuring that clients feel supported every step of the way.

Pricing: Llama 2 (13B) vs Grok

Cost of Llama 2 (13B)

The pricing for Llama 2 (13B) is structured to accommodate a wide range of users, from independent developers to large enterprises. As an openly available model from Meta, it provides the weights for free under the Llama 2 Community License, which permits commercial use subject to some conditions. Operational costs can still arise from server usage, data storage, and any additional customization or support required.

This economic approach allows developers to scale their usage according to their needs, making it a flexible option for budget-conscious projects. Additionally, the open-source nature of Llama 2 encourages a vibrant community of developers who contribute to its ongoing improvement and optimization, which can lead to reduced costs over time as shared resources and knowledge become available. Users can tap into community-driven plugins and enhancements, further lowering the barrier to entry for innovative applications.

Cost of Grok

Grok typically operates on a subscription or usage-based pricing model. While it may involve higher initial costs than Llama 2 due to its enterprise-focused features, the potential for substantial ROI through improved customer interactions can justify the investment for many organizations. The platform is designed with advanced analytics and reporting tools that help businesses track performance metrics, allowing them to make data-driven decisions that can enhance their operational efficiency.

Moreover, Grok often includes various tiers of service packages, ensuring that businesses can select a plan tailored to their specific requirements and scale as they grow. This flexibility is particularly advantageous for startups and mid-sized companies that may experience fluctuating needs. Additionally, Grok's dedicated support and training services can help organizations maximize their investment, ensuring that teams are well-equipped to leverage the AI's capabilities effectively. This comprehensive support structure can significantly reduce the learning curve associated with implementing advanced AI solutions, making it an attractive option for those looking to integrate AI into their workflows seamlessly.
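
The two pricing shapes described in this section, a free model with self-hosting costs versus a subscription or usage-based service, can be compared with simple arithmetic. The sketch below shows one way to estimate a break-even point. All prices in the example are hypothetical placeholders chosen for illustration, not quotes for either product, and the function names are our own.

```python
def monthly_cost_self_hosted(gpu_hourly_usd: float,
                             hours_per_month: float = 730.0,
                             fixed_overhead_usd: float = 0.0) -> float:
    """Cost of running a server continuously, plus storage or support overhead."""
    return gpu_hourly_usd * hours_per_month + fixed_overhead_usd


def monthly_cost_usage_based(tokens_per_month: float,
                             usd_per_million_tokens: float,
                             subscription_usd: float = 0.0) -> float:
    """Cost of a hosted service billed per token, plus any flat subscription."""
    return tokens_per_month / 1e6 * usd_per_million_tokens + subscription_usd


def break_even_tokens(self_hosted_usd: float,
                      usd_per_million_tokens: float,
                      subscription_usd: float = 0.0) -> float:
    """Monthly token volume at which both options cost the same."""
    return (self_hosted_usd - subscription_usd) / usd_per_million_tokens * 1e6


if __name__ == "__main__":
    # Hypothetical inputs: a $1.50/hour GPU server and $5 per million tokens.
    hosted = monthly_cost_self_hosted(gpu_hourly_usd=1.50)
    tokens = break_even_tokens(hosted, usd_per_million_tokens=5.0)
    print(f"Self-hosted: ${hosted:.2f}/month")
    print(f"Break-even volume: {tokens / 1e6:.0f}M tokens/month")
```

Below the break-even volume the usage-based option is cheaper under these assumptions, and above it self-hosting wins, although engineering time for operating the server is not captured here.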

Final Verdict: Which is Better?

Pros and Cons of Llama 2 (13B)

Pros:

- High linguistic coherence, making it suitable for diverse applications.

- Cost-effective due to its open-source nature.

- Highly efficient and scalable for varying project sizes.

Cons:

- Less emphasis on real-time learning compared to Grok.

- May require more elbow grease for customization.

Pros and Cons of Grok

Pros:

- Excellent adaptability and responsiveness to user intent.

- Strong focus on user engagement and conversational quality.

- Comprehensive customer support services.

Cons:

- Higher costs associated with advanced features and services.

- Potential performance variability due to real-time learning mechanisms.

Making the Final Choice

Ultimately, the choice between Llama 2 (13B) and Grok will depend on the specific requirements of the project at hand. For those needing a cost-effective, linguistically focused tool capable of handling a variety of tasks, Llama 2 (13B) presents a compelling solution.

Conversely, organizations aiming to enhance user interactions through personalized, adaptive dialogue will find Grok an invaluable asset. By carefully weighing the pros and cons of each model, developers can determine the best fit for their needs and leverage their strengths to create impactful AI-driven applications.

In addition to the technical specifications, it’s essential to consider the community and ecosystem surrounding these models. Llama 2 (13B), being open-source, benefits from a vibrant community of developers and researchers who contribute to its continuous improvement. This collaborative environment fosters innovation, allowing users to share insights, tools, and customizations that can enhance the model's performance in real-world applications. Furthermore, the open-source nature encourages transparency, which can be a significant advantage for organizations concerned about data privacy and ethical AI usage.

On the other hand, Grok's proprietary framework offers a polished user experience, often accompanied by dedicated resources for onboarding and troubleshooting. This can be particularly beneficial for businesses that may lack the technical expertise to fully leverage an open-source solution. The investment in Grok may yield a more streamlined deployment process and immediate access to cutting-edge features, making it an attractive option for companies looking to implement advanced AI functionalities quickly and efficiently.