Llama Advanced Guide: Expert Tips and Techniques

Welcome to the advanced guide on Llama, where we delve deep into the nuances of utilizing this cutting-edge technology. Whether you're new to Llama or looking to refine your skills, this guide promises to enhance your understanding and expertise. We will explore foundational concepts, advanced techniques, expert tips, and even speculate on the future of Llama.

Understanding the Basics of Llama

Defining Llama: What It Is and What It Isn't

Llama is a family of large language models (LLMs) developed by Meta for generating human-like text in response to prompts. Its ability to produce fluent, context-appropriate language makes it a powerful tool for applications ranging from chatbots to content generation. However, it is essential to clarify what Llama is not: it does not possess genuine understanding or consciousness. It processes input and produces output based on statistical patterns learned from vast text datasets.

Under the hood, Llama is a decoder-only transformer, a deep learning architecture from natural language processing (NLP), trained to predict the next token in a sequence. Repeating that prediction one token at a time lets it produce coherent, contextually relevant answers, which can make it seem as if it understands the content being discussed. These interactions can be impressive, but users should remember that they are the output of a statistical model rather than true comprehension. Llama's responses also reflect the quality and diversity of its training data, so without careful management they can be unexpected or biased.
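
To make "patterns learned from data" concrete, here is a deliberately tiny stand-in for next-token prediction: a word-level bigram model that counts which word follows which in a toy corpus, then generates text greedily by always picking the most frequent continuation. This is an illustration only and assumes nothing about Llama's internals beyond next-token prediction; Llama is a transformer over subword tokens, and the corpus and function names here are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    words = corpus.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Greedy decoding: repeatedly append the most frequent next word."""
    output = [start]
    for _ in range(max_words):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # no learned continuation for this word
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


# Hypothetical toy corpus; real models train on vastly more text.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)
print(generate(model, "the"))  # → the cat sat on the cat
```

The output starts repeating itself, a familiar weakness of greedy decoding; production systems usually sample from the predicted distribution instead, controlled by settings such as temperature.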

The Evolution of Llama: A Brief History

The evolution of Llama can be traced through several milestones in machine learning and natural language processing. Early language-processing systems date back to the 1950s, and the 1980s and 1990s brought important advances, including the popularization of backpropagation for training neural networks and the development of support vector machines. However, the field truly took off after the transformer architecture was introduced in 2017 in the paper "Attention Is All You Need".

Following the introduction of transformers, a series of large language models emerged, and Llama, first released by Meta in 2023, is a product of this progression. Its refinements let it process and generate text more efficiently than many of its predecessors, helped by continued growth in computational power and algorithmic sophistication. Training begins by feeding the model vast amounts of text and having it predict each next token, which is how it picks up context, syntax, and semantics. This extensive pretraining phase is what allows Llama to generate responses that are not only relevant but also nuanced, capturing many subtleties of human language. As research continues, future iterations of Llama can be expected to handle more complex queries and sustain more sophisticated dialogues.

Advanced Techniques for Llama

Optimizing Llama for Efficiency

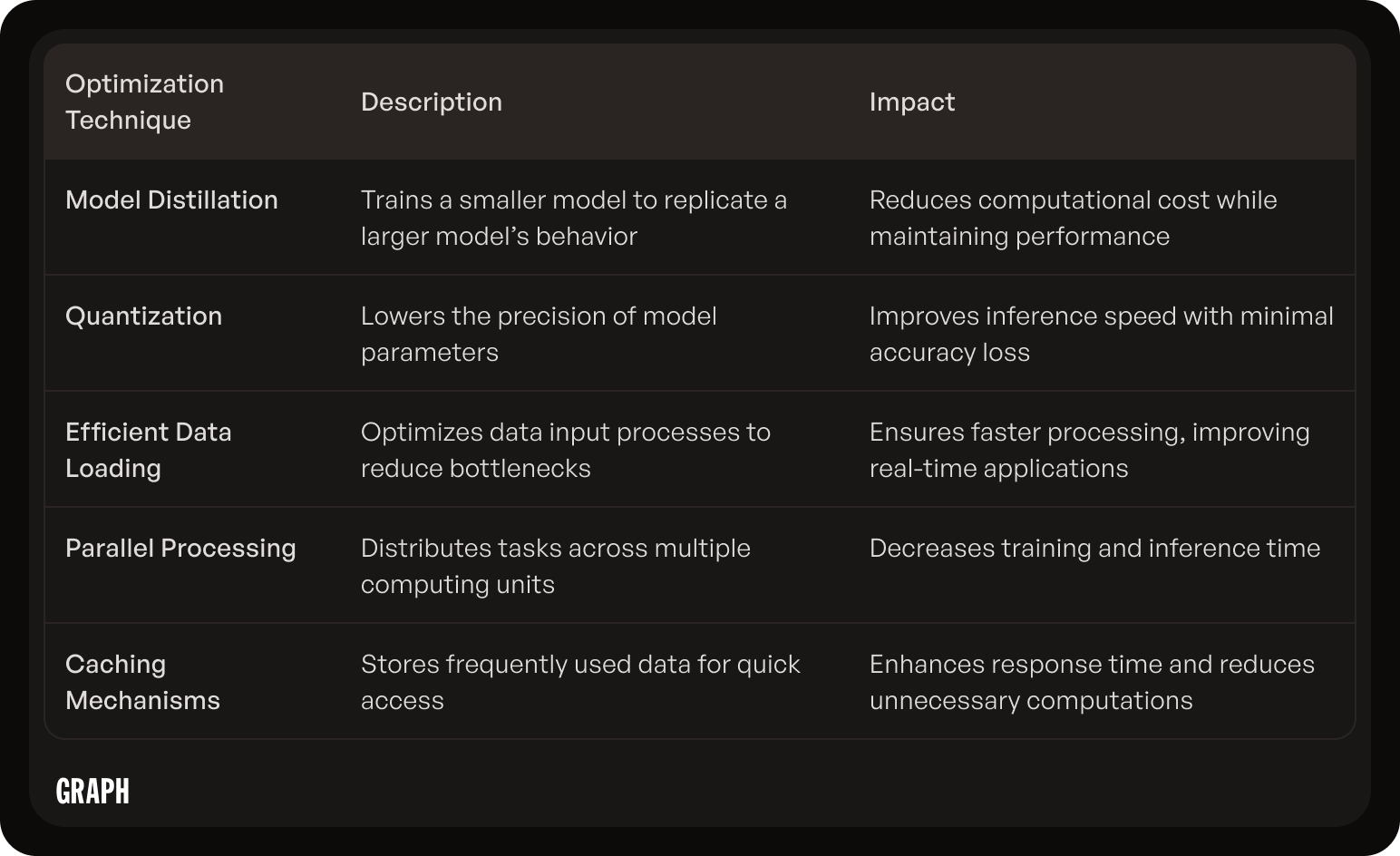

When working with Llama, optimizing for efficiency is crucial, particularly when dealing with large datasets or real-time requirements. Some strategies to consider include:

- Knowledge Distillation: This technique trains a smaller "student" model to reproduce the outputs of a larger "teacher" model, yielding a model that is cheaper to run while retaining much of the teacher's performance.

- Quantization: This process stores the model's parameters at lower precision (for example, 8-bit or 4-bit integers instead of 16-bit floating point), which reduces memory use and can speed up inference, often with only a small loss in accuracy.

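- Efficient Data Loading: Streamlining your data pipeline (for example, by tokenizing ahead of time, batching inputs, and loading the next batch while the current one is being processed) minimizes bottlenecks, so the model is not left waiting on data under heavy load.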
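
The first two techniques can be illustrated in plain Python. The sketch below shows symmetric per-tensor int8 quantization of a weight list and the widely used soft-target distillation loss: the KL divergence between temperature-softened teacher and student distributions, scaled by T^2. The weights and logits are hypothetical toy values; real Llama deployments rely on framework or library implementations rather than hand-written loops like these.

```python
import math


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; a higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Toy weights and logits (hypothetical values for illustration only).
weights = [0.8, -0.3, 0.05, -1.27]
codes, scale = quantize_int8(weights)
print(codes)  # → [80, -30, 5, -127]
print(max(abs(a - b) for a, b in zip(weights, dequantize(codes, scale))))  # rounding error

print(distillation_loss([2.0, 1.0, 0.1], [2.0, 1.0, 0.1]))  # identical logits → 0.0
print(distillation_loss([0.1, 1.0, 2.0], [2.0, 1.0, 0.1]))  # mismatch gives a positive loss
```

A single scale per tensor is the simplest scheme; practical quantizers often use per-channel or per-group scales to keep rounding error lower.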

Incorporating these optimizations not only boosts performance but can also reduce operational costs, which benefits developers and businesses alike. Parallel processing can improve efficiency further: distributing work across multiple processors or nodes can substantially reduce training and inference time, which matters most where latency is critical. Caching responses or intermediate results for frequently repeated requests can also relieve pressure on your pipeline, keeping the system responsive during peak usage.
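
Caching and parallelism can be sketched with the standard library alone. Here `fake_generate` is a hypothetical stand-in for a request to a Llama inference server; `functools.lru_cache` memoizes repeated prompts, and `concurrent.futures.ThreadPoolExecutor` sends distinct prompts concurrently. Threads suit I/O-bound calls such as HTTP requests to a model server; CPU-bound work in Python would need processes or the framework's own parallelism.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache

_lock = threading.Lock()
backend_calls = 0  # how many requests actually reached the (hypothetical) backend


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a slow, I/O-bound request to a Llama server."""
    global backend_calls
    with _lock:  # the counter is shared between worker threads
        backend_calls += 1
    time.sleep(0.1)  # simulate network and inference latency
    return prompt.upper()


@lru_cache(maxsize=1024)
def cached_generate(prompt: str) -> str:
    """Serve repeated prompts from an in-memory LRU cache."""
    return fake_generate(prompt)


def answer_all(prompts: list[str]) -> list[str]:
    """Answer a batch: distinct prompts run in parallel, duplicates hit the cache."""
    # Deduplicate first so two workers never miss the cache on the same prompt at once.
    unique = list(dict.fromkeys(prompts))
    with ThreadPoolExecutor(max_workers=4) as pool:
        list(pool.map(cached_generate, unique))  # fill the cache concurrently
    return [cached_generate(p) for p in prompts]  # every lookup is now a cache hit


prompts = ["hello", "what is llama?", "hello", "summarize this"]
start = time.perf_counter()
answers = answer_all(prompts)
print(answers, f"{time.perf_counter() - start:.2f}s, backend calls: {backend_calls}")
```

Caching only makes sense when reusing an earlier answer is acceptable, for example with deterministic (greedy) decoding or for FAQ-style prompts.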

Advanced Programming for Llama

Programming with Llama requires a deeper understanding of the libraries and frameworks often used in conjunction with this technology, such as TensorFlow and PyTorch. To harness its capabilities, consider the following advanced programming techniques:

- Custom Training Loops: Instead of relying on a framework's built-in training utilities, writing your own training loop gives you finer control over training parameters and model adjustments.

- Fine-Tuning for Specific Use Cases: Further training the model on datasets relevant to your application can substantially improve accuracy and performance for that application.

- Implementing Transfer Learning: Employing transfer learning allows developers to leverage pre-trained models, significantly reducing training times and resource consumption.
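
A custom training loop and transfer learning fit together naturally: keep a pretrained feature extractor frozen and train only a small new head on task data. The sketch below is a toy, stdlib-only stand-in. `pretrained_features` is a hypothetical fixed function playing the role of a frozen pretrained model, and the loop trains a logistic-regression head with hand-written gradient descent and early stopping on a validation set. With Llama you would do the equivalent in PyTorch (for example, setting `requires_grad = False` on frozen parameters) or use a parameter-efficient method such as LoRA; neither is shown here.

```python
import math


def pretrained_features(x: float) -> list[float]:
    """Hypothetical frozen 'backbone': fixed features that training never changes."""
    return [x, x * x, 1.0]  # the constant 1.0 acts as a bias feature


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(head: list[float], x: float) -> float:
    """Probability of the positive class from the trainable head."""
    return sigmoid(sum(w * f for w, f in zip(head, pretrained_features(x))))


def loss_and_grads(head, data):
    """Mean binary cross-entropy and its gradient with respect to the head only."""
    loss, grads = 0.0, [0.0] * len(head)
    for x, y in data:
        feats = pretrained_features(x)
        p = predict(head, x)
        p_safe = min(max(p, 1e-12), 1 - 1e-12)  # avoid log(0)
        loss -= y * math.log(p_safe) + (1 - y) * math.log(1 - p_safe)
        for i, f in enumerate(feats):
            grads[i] += (p - y) * f
    n = len(data)
    return loss / n, [g / n for g in grads]


def train_head(train, val, lr=0.5, max_epochs=500, patience=20):
    """Custom loop: gradient descent on the head, early stopping on validation loss."""
    head = [0.0, 0.0, 0.0]  # only these weights are trained; the backbone stays fixed
    best_val, best_head, bad_epochs = float("inf"), list(head), 0
    for _ in range(max_epochs):
        _, grads = loss_and_grads(head, train)
        head = [w - lr * g for w, g in zip(head, grads)]
        val_loss, _ = loss_and_grads(head, val)
        if val_loss < best_val - 1e-6:
            best_val, best_head, bad_epochs = val_loss, list(head), 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                break  # validation loss stopped improving
    return best_head, best_val


# Toy task (hypothetical): the label is 1 when |x| > 1.
data = [(i / 4, 1 if abs(i / 4) > 1 else 0) for i in range(-8, 9)]
train, val = data[::2], data[1::2]
head, best_val = train_head(train, val)
print(f"best validation loss: {best_val:.3f}")
print(f"p(x=2.0) = {predict(head, 2.0):.2f}, p(x=0.0) = {predict(head, 0.0):.2f}")
```

Stopping on held-out validation loss rather than training loss is what keeps the loop from overfitting a small task dataset.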

These techniques open new avenues for applying Llama in projects and help software engineers build robust, responsive systems. Automated hyperparameter tuning can improve model performance further: libraries such as Optuna or Ray Tune explore a wide range of hyperparameter configurations efficiently and help identify good settings for a specific task. Visualization tools that track training progress and performance metrics also provide valuable insight, letting developers make informed adjustments throughout the development lifecycle.
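
Optuna and Ray Tune add smarter samplers, early pruning of bad trials, and distributed execution, but the core idea can be shown without guessing at their APIs. Below is plain random search using only the standard library. The `objective` function is a hypothetical stand-in for "train with these settings and return validation loss"; in practice it would run a short fine-tuning job.

```python
import math
import random


def objective(learning_rate: float, warmup_steps: int) -> float:
    """Hypothetical validation loss; a real objective would train and evaluate a model."""
    # Made-up smooth surface whose minimum is near lr=3e-4, warmup=100.
    return (math.log10(learning_rate) - math.log10(3e-4)) ** 2 + ((warmup_steps - 100) / 100) ** 2


def random_search(n_trials: int = 50, seed: int = 0):
    """Sample configurations at random and keep the best one seen."""
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(n_trials):
        params = {
            # Log-uniform: the useful learning-rate range spans orders of magnitude.
            "learning_rate": 10 ** rng.uniform(-5, -2),
            "warmup_steps": rng.randint(0, 500),
        }
        loss = objective(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


params, loss = random_search()
print(params, round(loss, 3))
```

Sampling the learning rate log-uniformly is deliberate: a uniform draw over [1e-5, 1e-2] would put most trials above 1e-3.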

Expert Tips for Llama

Best Practices for Llama Implementation

Implementing Llama effectively requires adherence to several best practices. First, ensure that the training dataset is diverse and representative of your end users, to reduce bias and improve overall performance. Also maintain clear documentation throughout the development process to aid future maintenance and team collaboration. This documentation should cover not only the technical specifications but also the rationale behind design choices, which is invaluable for onboarding new team members and for future iterations of the project.
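
One way to make "representative of your end users" checkable is to compare each group's share of the training data with its expected share among users and flag large gaps. The grouping (language of each example), the expected shares, and the 0.5 tolerance ratio below are hypothetical; choose groups and thresholds that fit your application.

```python
from collections import Counter


def representation_report(samples, expected_shares, min_ratio=0.5):
    """Flag groups whose dataset share is below min_ratio times their expected share."""
    counts = Counter(samples)
    total = sum(counts.values())
    flagged = {}
    for group, expected in expected_shares.items():
        actual = counts.get(group, 0) / total
        if actual < min_ratio * expected:
            flagged[group] = (round(actual, 3), expected)
    return flagged


# Hypothetical: language of each training example vs. the expected mix of users.
training_languages = ["en"] * 90 + ["es"] * 8 + ["de"] * 2
expected = {"en": 0.6, "es": 0.25, "de": 0.15}
print(representation_report(training_languages, expected))
# → {'es': (0.08, 0.25), 'de': (0.02, 0.15)}
```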

Next, regularly testing the model with real-world scenarios can help identify potential issues early. This allows for refinements and adjustments before the model is deployed. Consider setting up a feedback loop with end-users to gather insights on their experiences and expectations. This feedback can be instrumental in fine-tuning the model's responses and ensuring it aligns with user needs. Finally, establish a monitoring system to evaluate the performance continually post-deployment, ensuring you can respond proactively to any unexpected behaviors. Implementing analytics tools can provide valuable data on user interactions, helping to inform further improvements.
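
Real-world scenario testing can start as a small regression suite: prompts paired with simple checks on the response, run before each deployment and on a schedule afterward. `call_model` is a hypothetical placeholder for however you invoke Llama, and keyword checks are a deliberately crude criterion; human review or model-graded evaluation can be layered on top.

```python
from dataclasses import dataclass, field


@dataclass
class Scenario:
    """One test case: a prompt plus phrases the answer must or must not contain."""
    prompt: str
    must_include: list[str] = field(default_factory=list)
    must_exclude: list[str] = field(default_factory=list)


def call_model(prompt: str) -> str:
    """Hypothetical placeholder; replace with a real call to your Llama deployment."""
    canned = {
        "What are your opening hours?": "We are open 9am to 5pm, Monday to Friday.",
        "Can I get a refund?": "Refunds are available within 30 days of purchase.",
    }
    return canned.get(prompt, "I'm not sure.")


def run_suite(scenarios: list[Scenario]) -> tuple[float, list[str]]:
    """Return the pass rate and the prompts that failed."""
    failures = []
    for s in scenarios:
        answer = call_model(s.prompt).lower()
        ok = all(p.lower() in answer for p in s.must_include) and not any(
            p.lower() in answer for p in s.must_exclude
        )
        if not ok:
            failures.append(s.prompt)
    return 1 - len(failures) / len(scenarios), failures


suite = [
    Scenario("What are your opening hours?", must_include=["9am", "5pm"]),
    Scenario("Can I get a refund?", must_include=["30 days"], must_exclude=["no refunds"]),
    Scenario("Do you ship to Canada?", must_include=["canada"]),
]
rate, failed = run_suite(suite)
print(f"pass rate: {rate:.0%}; failed: {failed}")
```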

Troubleshooting Common Llama Issues

Even the most advanced systems can encounter issues. Below are some common problems and steps for troubleshooting them:

- Inaccurate Responses: If Llama is generating irrelevant answers, re-evaluate the quality and relevance of your training or fine-tuning data and of the prompts you send. Fine-tuning on task-specific datasets may also help. User feedback can show where the model falls short, allowing targeted improvements.

- Performance Bottlenecks: Use profiling tools to find where the slowdowns occur. Optimizing the model and its serving setup (for example, through quantization or batching) and adding caching can improve performance, and parallel processing can reduce response times by distributing the workload across multiple processors.

- Model Drift: Over time, the inputs users send can shift away from the data the model was trained or fine-tuned on, making it less effective. Regularly refreshing your training data and retraining the model mitigates this. A routine for collecting data and evaluating the model helps keep it relevant and effective for current user queries.
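
Drift can often be detected before quality visibly drops by comparing the distribution of an input statistic (here, prompt length in words) between a baseline window and recent traffic. The sketch uses the Population Stability Index (PSI), a common drift score. The bucket edges and sample data are made up, and the 0.2 alert threshold is an assumption; rule-of-thumb cutoffs between roughly 0.1 and 0.25 are commonly cited, so tune it to your own traffic.

```python
import math
from bisect import bisect_right


def bucket_shares(values, edges):
    """Fraction of values falling into each bucket defined by ascending edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    # A small floor keeps empty buckets from producing log(0) or division by zero.
    return [max(c / total, 1e-4) for c in counts]


def psi(baseline, current, edges):
    """Population Stability Index between two samples of the same statistic."""
    expected = bucket_shares(baseline, edges)
    actual = bucket_shares(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


edges = [5, 10, 20, 40]  # hypothetical prompt-length buckets (words)
baseline = [4, 6, 8, 12, 7, 9, 15, 3, 11, 6] * 10  # lengths seen at launch (made up)
recent = [25, 30, 45, 12, 50, 35, 22, 60, 18, 41] * 10  # longer prompts today (made up)

score = psi(baseline, recent, edges)
print(f"PSI = {score:.2f}", "-> investigate / retrain" if score > 0.2 else "-> stable")
```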

By understanding and addressing these common issues, developers can maintain the integrity and effectiveness of their Llama implementations. A team culture of continuous learning and adaptation also encourages innovative solutions and keeps the system aligned with both current technology and user needs.

Future of Llama

Predicted Trends in Llama

The future of Llama is promising, with several trends likely to shape its development. One significant trend is growing demand for explainable AI: stakeholders increasingly want to understand how models reach specific conclusions, which is driving innovation in this direction. Researchers are exploring methods that offer insight into how Llama arrives at its outputs, such as visualizing attention weights and other interpretability techniques. This focus not only builds user trust but also supports compliance with regulatory frameworks that require transparency in AI systems.
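
Attention visualization starts from the attention weights themselves. The sketch computes scaled dot-product attention weights, softmax(q · k / sqrt(d)), for one query over a few tokens and prints them as a text bar chart. The tokens and vectors are made up; with a real checkpoint you would extract the weights from the model (Hugging Face Transformers, for example, accepts `output_attentions=True`), and attention weights are at best a partial explanation of model behaviour.

```python
import math


def attention_weights(query, keys):
    """Scaled dot-product attention weights: softmax(q . k / sqrt(d)) over all keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical 4-dimensional key vectors for the tokens of a short prompt.
tokens = ["The", "bank", "raised", "interest", "rates"]
keys = [
    [0.1, 0.0, 0.2, 0.1],
    [0.9, 0.1, 0.0, 0.3],
    [0.2, 0.8, 0.1, 0.0],
    [0.8, 0.2, 0.1, 0.4],
    [0.7, 0.1, 0.0, 0.5],
]
query = [1.0, 0.0, 0.0, 0.5]  # made-up query vector for the token being explained

weights = attention_weights(query, keys)
for token, w in zip(tokens, weights):
    print(f"{token:>10} {w:.2f} {'#' * round(w * 40)}")
```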

Additionally, as businesses increasingly adopt AI, the integration of ethical considerations into AI models will become paramount. This includes ensuring fairness and transparency in AI decision-making processes. Companies are beginning to implement ethical guidelines and frameworks that govern the development and deployment of AI technologies, which can help mitigate biases that may arise from training data. Furthermore, the establishment of diverse teams during the development phase can lead to more inclusive AI solutions that cater to a broader audience, ultimately enhancing user satisfaction and engagement.
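
Fairness becomes easier to manage once it is measured. One simple metric is the demographic parity difference: the gap between the highest and lowest rate of positive outcomes across groups. The decisions and group labels below are hypothetical, and demographic parity is only one of several fairness definitions, which can conflict with one another.

```python
from collections import defaultdict


def positive_rates(decisions, groups):
    """Share of positive decisions (1s) within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions, groups):
    """Largest minus smallest positive rate across groups (0.0 means parity)."""
    rates = positive_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical outputs of a Llama-based screening assistant: 1 = approved, 0 = declined.
decisions = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]
print(positive_rates(decisions, groups))  # → {'A': 0.8, 'B': 0.2}
print(demographic_parity_difference(decisions, groups))  # about 0.6
```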

Preparing for the Next Generation of Llama

To prepare for future generations of Llama, developers should stay updated with emerging research and advancements in NLP and deep learning. Participating in AI forums, workshops, and conferences can provide insights into upcoming changes and innovations. Engaging with the academic community can also lead to collaborative research opportunities that push the boundaries of what Llama can achieve. By staying connected with thought leaders in the field, developers can gain access to cutting-edge techniques and tools that can be applied to their projects.

Furthermore, fostering a culture of experimentation within teams can lead to the development of novel solutions leveraging Llama’s capabilities. Encouraging team members to prototype and test new ideas can result in breakthrough applications that may not have been considered in traditional development processes. Additionally, investing in continuous learning and professional development ensures that team members are equipped with the latest skills and knowledge. Finally, collaborating with interdisciplinary teams ensures a holistic approach to AI applications, encompassing not just technical aspects but also user experience and ethical considerations. This collaborative spirit can lead to more innovative and user-centric solutions that address real-world challenges.

In conclusion, this advanced guide offers a comprehensive overview of Llama, from basic understanding to emerging trends. By mastering the techniques and best practices discussed, developers can harness the full potential of Llama in their projects, paving the way for intelligent applications that resonate with users.